AI Inference on the AIR-T with TensorRT¶

The goal of this tutorial is to demonstrate execution of a neural network inference engine on the AIR-T by using the AIR-T to receive a signal, preprocess the associated signal samples, perform the neural network inference, and compare the results to expected calculations. This tutorial will not cover the training of a neural network. Instead, it provides an example neural network with static, pre-defined, weights that calculates the average power of a batch of complex-valued signal samples.

Background¶

For this tutorial, we will use the inference tools in our open source airstack-examples library, which provides a working example of how to perform neural network inference on the AIR-T. In this tutorial, we demonstrate a simple neural network model with a single output node that calculates the average of the instantaneous signal power across batches of signal samples received by the AIR-T's analog input. The source of the signal is at the discretion of the user: it can be a specific signal source, such as that from a signal generator, or merely the unconnected analog input to the AIR-T's transceiver.

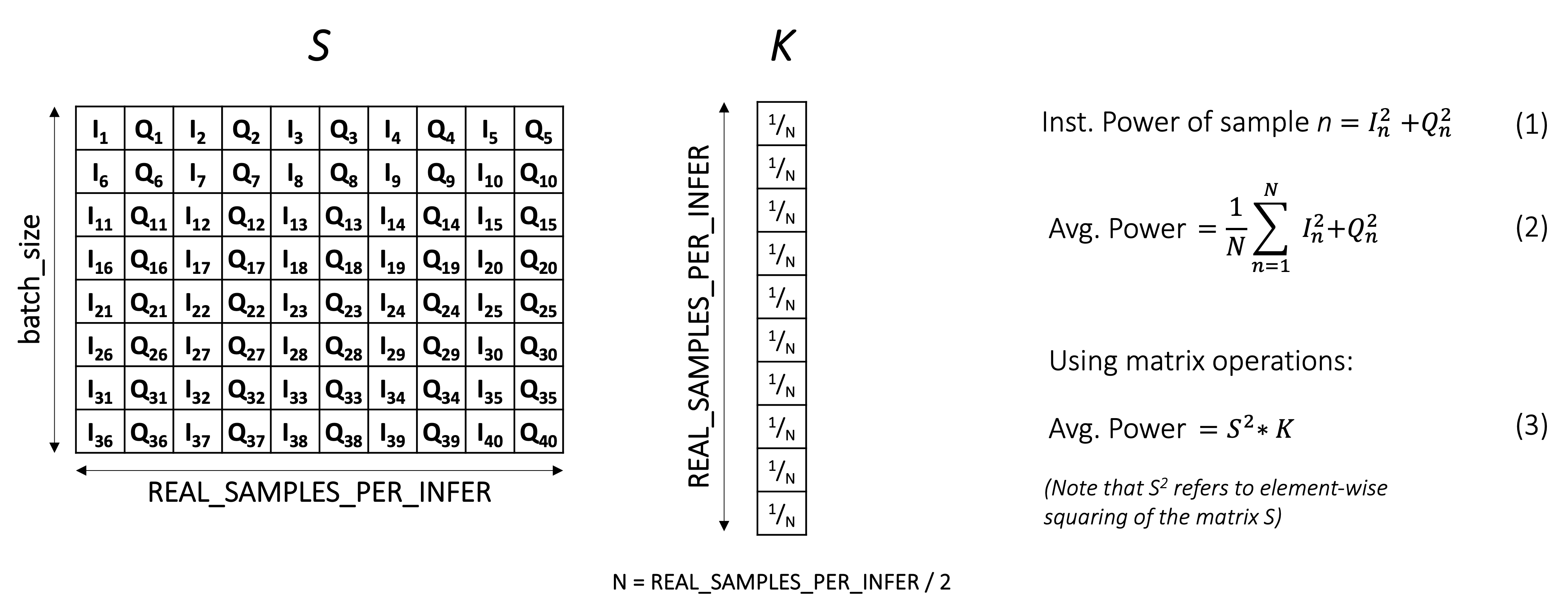

The figure below shows the calculations for computing the average power of a set of signal samples:

In this figure, the buffer size is 40 complex valued samples (80 real samples), with In representing the in-phase (real) component and Qn representing the quadrature (imaginary) component of each sample. A common method to calculate the average power of the samples in this buffer is to compute the instantaneous power for each sample as the sum of In2 + Qn2 as shown as Equation (1) and to then calculate the average of these values across the buffer using Equation (2).

An alternative method to calculating the power is to formulate the problem as a set of matrix operations. The 40-complex element buffer (again, 80 real samples) may be restructured into an 8 x 10 matrix of real samples, represented by S in the figure. If we then define a 10-element vector, K, such that each element has the value 1/N, then the matrix operations shown in Equation (3) are mathematically equivalent to Equation (2). Said differently, the average power of each row in S is computed by the matrix multiplication. This set of operations is also equivalent to a fully-connected neural network layer without a bias operation and represents a simple neural network that will be used in the following steps to demonstrate how to perform inference on the AIR-T.

Creating the Neural Network¶

The airstack-examples toolbox demonstrates how to create a simple neural network for TensorFlow, PyTorch, and MATLAB on a host computer. Installation of these packages for training is made easy by the use of Conda environment management tools (Anaconda). For the inference execution, all Python packages and dependencies are pre-installed on AIR-Ts running AirStack 0.3.0+. The output of each of these frameworks is a model file in an ONNX format, an open standard for representing machine learning models. Generally speaking, the AIR-T supports inference on any model that uses the ONNX format.

The Python code to create this neural network is provided for PyTorch, TensorFlow, and MATLAB:

- PyTorch:

airstack-examples/inference/pytorch/make_avg_pow_net.py - TensorFlow:

airstack-examples/inference/pytorch/make_avg_pow_net.py - MATLAB:

airstack-examples/inference/matlab/make_avg_pow_net.m

When run, each of these functions will output a file called avg_pow_net.onnx. For convenience, we will use the airstack-examples/inference/pytorch/make_avg_pow_net.py file for this tutorial.

Creating the Inference Conda Environment¶

The first step is to create and activate a Conda environment in which to perform inference. This environment can be defined using the YAML file that is pre-installed on your AIR-T in /opt/deepwave/AIR-T_QuickStart_Apps and that is also used in airstack-examples. While the most up-to-date publicly available versions of these file are in our GitHub repository, the version that is pre-installed on your AIR-T will work best with your current AirStack version. From a terminal on your AIR-T, type the following commands:

-

Create the

airstack-inferconda environment:This step may take up to 10 minutes.conda env create -f \ /opt/deepwave/AIR-T_QuickStart_Apps/conda/environments/airstack.yml -

Activate the conda environment:

conda activate airstack

Copying the Inference Files Folder¶

The directory on the AIR-T containing the inference example, /opt/deepwave/AIR-T_QuickStart_Apps, is read-only; to use the example files, they must be copied to a directory for which you have read/write permissions. The following command, for example, will copy these files to your home directory.

cp -r /opt/deepwave/AIR-T_QuickStart_Apps/inference ~/

Performing Model Optimization with TensorRT¶

We will optimize the network in avg_pow_net.onnx using NVIDIA's TensorRT. This step may be done by running the onnx2plan.py program found in the airstack-examples repository,

cd ~/inference; python onnx2plan.py

pytorch/avg_pow_net.plan. The .plan file is an optimized version of the neural network that will be used for inference in the next steps. TensorRT Optimization Application Note - When training a neural network for execution on the AIR-T, make sure that the layers being used are supported by your version of TensorRT. To determine what version of TensorRT is installed on your AIR-T, open a terminal and run:

$ dpkg -l | grep TensorRT

The supported layers for your version of TensorRT may be found in the TensorRT SDK Documentation under the TensorRT Support Matrix section.

Understanding the Inference Code¶

The inference code itself is contained in the file run_airt_inference.py, which first defines the top-level inference settings for the neural network and radio as shown in the excerpt below. Note that these settings are correct for the neural network used in this tutorial but, in general, will depend on the structure of the model.

import numpy as np

import trt_utils

from SoapySDR import Device, SOAPY_SDR_RX, SOAPY_SDR_CF32, SOAPY_SDR_OVERFLOW

# Top-level inference settings.

CPLX_SAMPLES_PER_INFER = 2048 # Half input_len from the neural network

PLAN_FILE_NAME = 'pytorch/avg_pow_net.plan' # File created from uff2plan.py

BATCH_SIZE = 128 # Less than or equal to max_batch_size from uff2plan.py

NUM_BATCHES = 16 # Batches to run. Use float('Inf') to run continuously

# Top-level SDR settings.

SAMPLE_RATE = 7.8125e6 # AIR-T sample rate

CENTER_FREQ = 2400e6 # AIR-T Receiver center frequency

CHANNEL = 0 # AIR-T receiver channel

As a side note, the trt_utils import is a provided utility to manage the device mapped memory that enables the AIR-T's zero-copy feature.

Inference Loop¶

The code below will perform the following operations:

- Allocate a shared memory buffer on the AIR-T using pyCUDA

- Initialize the neural network using TensorRT

- Initialize and set up the AIR-T Radio

- Receive samples for a fixed number of batches in a while loop to:

- Read data into the buffer from the RF front end

- Test to ensure that the data was received properly

- Feed the data buffer into the neural network for inference

- Test if the neural network computation matches what is expected.

Here is the source code for the inference loop:

def main():

# Allocate a shared memory buffer on the AIR-T using pyCUDA

trt_utils.make_cuda_context()

buff_len = 2 * CPLX_SAMPLES_PER_INFER * BATCH_SIZE

sample_buffer = trt_utils.MappedBuffer(buff_len, np.float32)

# Initialize the neural network using TensorRT

dnn = trt_utils.TrtInferFromPlan(PLAN_FILE_NAME, BATCH_SIZE, sample_buffer)

# Initialize and setup the AIR-T Radio

sdr = Device()

sdr.setGainMode(SOAPY_SDR_RX, CHANNEL, True)

sdr.setSampleRate(SOAPY_SDR_RX, CHANNEL, SAMPLE_RATE)

sdr.setFrequency(SOAPY_SDR_RX, CHANNEL, CENTER_FREQ)

rx_stream = sdr.setupStream(SOAPY_SDR_RX, SOAPY_SDR_CF32, [CHANNEL])

sdr.activateStream(rx_stream)

# Receive samples for a fixed number of batches in a while loop

print('Receiving Data')

ctr = 0

while ctr < NUM_BATCHES:

try:

# Read data in to the buffer from the RF front end

sr = sdr.readStream(rx_stream, [sample_buffer.host], buff_len)

# Test to ensure that the data was received properly

if sr.ret == SOAPY_SDR_OVERFLOW:

print('O', end='', flush=True)

continue

# Feed the data buffer into the neural network for inference

dnn.feed_forward()

# Test if the neural network computation matches what is expected.

output_arr = dnn.output_buff.host

if not passed_test(sample_buffer.host, output_arr):

raise ValueError('Neural network output does not match numpy')

except KeyboardInterrupt:

break

ctr += 1

sdr.closeStream(rx_stream)

if ctr == NUM_BATCHES:

print('SUCCESS! All inference output values matched expected values!')

Result Validation¶

To test that the computation from Equation (3) matches that of Equation (2) we will create a function (shown below) that uses NumPy to calculate Equation (2) and that compares the result to the neural network computation.

def passed_test(buff_arr, result):

""" Make sure numpy calculation matches TensorFlow calculation. Returns True

if the numpy calculation matches the TensorFlow calculation"""

buff = buff_arr.reshape(BATCH_SIZE, -1) # Reshape so 1st dim is batch_size

sig = buff[:, ::2] + 1j*buff[:, 1::2] # Convert to complex valued array

wlen = float(sig.shape[1]) # Normalization factor

np_result = np.sum((sig.real**2) + (sig.imag**2), axis=1) / wlen

return np.allclose(np_result, result)

Running the Example Code¶

First, make sure that you have:

- Enabled the airstack Conda environment that was created in a previous step

- Optimized the model to create the

pytorch/avg_pow_net.planfile

To perform inference using that plan file, run the following:

cd ~/inference; python run_airt_inference.py

(airstack) $ cd ~/inference; python run_airt_inference.py

[TensorRT] INFO: [MemUsageChange] Init CUDA: CPU +234, GPU +0, now: CPU 264, GPU 3322 (MiB)

[TensorRT] INFO: Loaded engine size: 0 MB

[TensorRT] INFO: [MemUsageSnapshot] deserializeCudaEngine begin: CPU 264 MiB, GPU 3322 MiB

[TensorRT] VERBOSE: Using cublas a tactic source

[TensorRT] INFO: [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +167, GPU +169, now: CPU 432, GPU 3491 (MiB)

[TensorRT] VERBOSE: Using cuDNN as a tactic source

[TensorRT] INFO: [MemUsageChange] Init cuDNN: CPU +250, GPU +249, now: CPU 682, GPU 3740 (MiB)

[TensorRT] INFO: [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +0, now: CPU 681, GPU 3740 (MiB)

[TensorRT] VERBOSE: Deserialization required 1797501 microseconds.

[TensorRT] INFO: [MemUsageSnapshot] deserializeCudaEngine end: CPU 681 MiB, GPU 3740 MiB

[TensorRT] INFO: [MemUsageSnapshot] ExecutionContext creation begin: CPU 681 MiB, GPU 3740 MiB

[TensorRT] VERBOSE: Using cublas a tactic source

[TensorRT] INFO: [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +0, now: CPU 681, GPU 3740 (MiB)

[TensorRT] VERBOSE: Using cuDNN as a tactic source

[TensorRT] INFO: [MemUsageChange] Init cuDNN: CPU +0, GPU +0, now: CPU 681, GPU 3740 (MiB)

[TensorRT] VERBOSE: Total per-runner device memory is 0

[TensorRT] VERBOSE: Total per-runner host memory is 624

[TensorRT] VERBOSE: Allocated activation device memory of size 2098176

[TensorRT] INFO: [MemUsageSnapshot] ExecutionContext creation end: CPU 681 MiB, GPU 3740 MiB

TensorRT Inference Settings:

Batch Size : 128

Explicit Batch : True

Input Layer

Name : input_buffer

Shape : (128, 4096)

dtype : float32

Output Layer

Name : output_buffer

Shape : (128, 1)

dtype : float32

Receiver Output Size : 524,288 samples

TensorRT Input Size : 524,288 samples

TensorRT Output Size : 128 samples

Receiving Data

SUCCESS! All inference output values matched expected values!

[TensorRT] INFO: [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +0, now: CPU 686, GPU 3746 (MiB)

Next Steps¶

The goal of this tutorial was to demonstrate the step-by-step process to perform neural network inference. The airstack-examples repo provides all of the source code necessary to properly allocate shared memory buffers (using pyCUDA) and feed signal data from the AIR-T's radio to a neural network for inference.

We encourage users to apply the lessons learned in this tutorial to use the AIR-T to create their own neural network for inference.