AirStack Software¶

The AIR-T comes pre-loaded with a full software stack, AirStack. AirStack includes all the components necessary to use the AIR-T, such as an Ubuntu-based operating system, AIR-T-specific device drivers, and the FPGA firmware. The operating system is based on the NVIDIA JetPack SDK and is upgraded periodically. Please check for the latest software at www.deepwavedigital.com.

Application Programming Interfaces¶

Applications for the AIR-T may be developed in almost any programming language, but C/C++ and Python are the primary supported languages. AirStack supports various Application Programming Interfaces (APIs); the most common are described below.

Hardware Control¶

SoapyAIRT¶

SoapySDR is the primary API for interfacing with the AIR-T via the SoapyAIRT driver. SoapySDR is an open-source API and run-time library for interfacing with various SDR devices. The AirStack environment includes SoapySDR and the SoapyAIRT driver to enable communication with the radio interfaces using Python or C++. The Python code below provides an operational example of how to receive data from the radio using the SoapyAIRT driver.

    #!/usr/bin/env python3
    from SoapySDR import Device, SOAPY_SDR_RX, SOAPY_SDR_CS16
    import numpy as np

    sdr = Device(dict(driver="SoapyAIRT"))       # Create AIR-T instance
    sdr.setSampleRate(SOAPY_SDR_RX, 0, 125e6)    # Set sample rate on chan 0
    sdr.setGainMode(SOAPY_SDR_RX, 0, True)       # Use AGC on channel 0
    sdr.setFrequency(SOAPY_SDR_RX, 0, 2.4e9)     # Set frequency on chan 0
    buff = np.empty(2 * 16384, np.int16)         # Create memory buffer
    stream = sdr.setupStream(SOAPY_SDR_RX,
                             SOAPY_SDR_CS16, [0])  # Setup data stream
    sdr.activateStream(stream)                   # Turn on the radio

    for i in range(10):                          # Receive 10x16384 windows
        sr = sdr.readStream(stream, [buff], 16384)   # Read 16384 samples
        rc = sr.ret                                  # Number of samples read
        assert rc == 16384, 'Error code = %d!' % rc  # Make sure no errors
        s0 = buff.astype(float) / np.power(2.0, 15)  # Scaled interleaved signal
        s = s0[::2] + 1j*s0[1::2]                    # Complex signal data
        # <Insert code here that operates on s>

    sdr.deactivateStream(stream)                 # Stop streaming samples
    sdr.closeStream(stream)                      # Turn off radio
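The scaling and de-interleaving step inside the receive loop can be tried without any radio hardware. The following is a minimal sketch, not part of AirStack: `cs16_to_complex` is a hypothetical helper name, and the `raw` array is a synthetic stand-in for the buffer that `readStream` would fill.

```python
import numpy as np


def cs16_to_complex(buff):
    """Convert interleaved int16 I/Q values (CS16 layout) to complex samples."""
    if buff.size % 2:
        raise ValueError("interleaved buffer must hold an even number of values")
    scaled = buff.astype(np.float32) / 32768.0  # int16 full scale is 2**15
    # Even indices carry I (real part), odd indices carry Q (imaginary part)
    return scaled[0::2] + 1j * scaled[1::2]


# Synthetic stand-in for one filled receive buffer: two I/Q pairs
raw = np.array([16384, -16384, 32767, -32768], dtype=np.int16)
s = cs16_to_complex(raw)
```

Wrapping the conversion in a function keeps the receive loop short and makes the scaling easy to unit test on a development machine.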

UHD¶

A key feature of SoapySDR is its ability to translate to/from other popular SDR APIs, such as UHD. The SoapyUHD plugin is included with AirStack and enables developers to create applications using UHD or execute existing UHD-based applications on the AIR-T. This interface is described in the figure below.

    flowchart LR
        %% Define Shapes
        subgraph AIRSTACK[**AirStack**]
            direction LR
            A[**SoapyAIRT**<br>Deepwave]
            B[**SoapySDR**<br>3rd Party]
            C[**UHD**<br>3rd Party]
        end
        %% Define Connections
        A <==> B <==> C

If you are interested in using UHD for your application, please see the associated AirStack Application Notes for details regarding compatibility and other important considerations.

Signal Processing¶

Python Interfaces¶

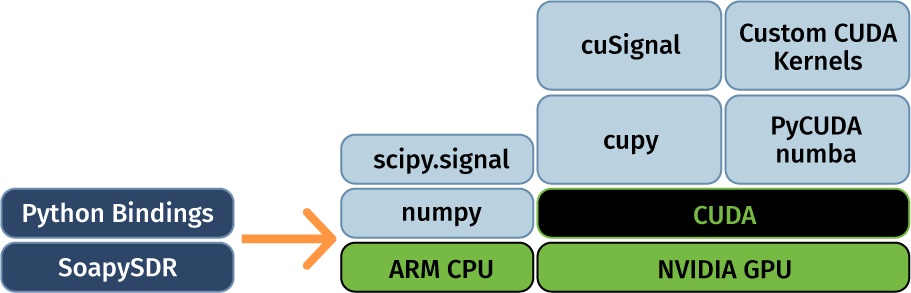

The figure above illustrates supported Python APIs that can be used to develop signal processing applications on both the CPU and GPU of the AIR-T. In general, these have been selected because they have modest overhead compared to native code and are well suited to rapid prototyping. In addition, C++ interfaces are provided for many control and processing interfaces to the AIR-T for use in performance-critical applications.

The table below outlines the common data processing APIs that are natively supported by AirStack, along with the general-purpose processor (GPP), CPU or GPU, that each API runs on. Some of these are included with AirStack, while some are available via the associated URL.

Table 15

| API | GPP | Description |
|---|---|---|
| numpy | CPU | numpy is a common data analysis and processing Python module. URL: https://numpy.org/ |
| scipy.signal | CPU | SciPy is a scientific computing library for Python that contains a signal processing library, scipy.signal. URL: https://docs.scipy.org/doc/scipy/reference/signal.html |
| cupy | GPU | Open-source matrix library accelerated with NVIDIA CUDA that is semantically compatible with numpy. URL: https://cupy.chainer.org/ |
| cuSignal | GPU | Open-source signal processing library accelerated with NVIDIA CUDA based on scipy.signal. URL: https://github.com/rapidsai/cusignal |
| PyCUDA / numba | GPU | Python access to the full power of NVIDIA’s CUDA API. URL: https://documen.tician.de/pycuda/ |
| Custom CUDA Kernels | GPU | Custom CUDA kernels may be developed and executed on the AIR-T. |
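Because cupy is semantically compatible with numpy, a processing function can often be written once and run on either processor. The sketch below illustrates the idea under stated assumptions: `power_spectrum_db` and its `xp` parameter are hypothetical names, and only the numpy path is exercised here. Passing the `cupy` module as `xp` is expected to run the same steps on the GPU, but that path is not shown or verified.

```python
import numpy as np


def power_spectrum_db(samples, xp=np):
    """Power spectrum of complex samples in dB, using array module xp."""
    data = xp.asarray(samples)
    spectrum = xp.fft.fft(data)
    # Normalize so a full-scale complex tone sits at 0 dB in its bin
    power = xp.abs(spectrum) ** 2 / len(data) ** 2
    return 10.0 * xp.log10(power + 1e-20)  # small offset avoids log10(0)


# Test signal: a complex tone centered exactly on FFT bin 100
n = 1024
tone = np.exp(2j * np.pi * 100 * np.arange(n) / n)
psd = power_spectrum_db(tone)
peak_bin = int(np.argmax(psd))
```

Keeping the array module as a parameter lets the same code be prototyped on a CPU-only machine and moved to the GPU later without rewriting the signal processing logic.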

GNU Radio¶

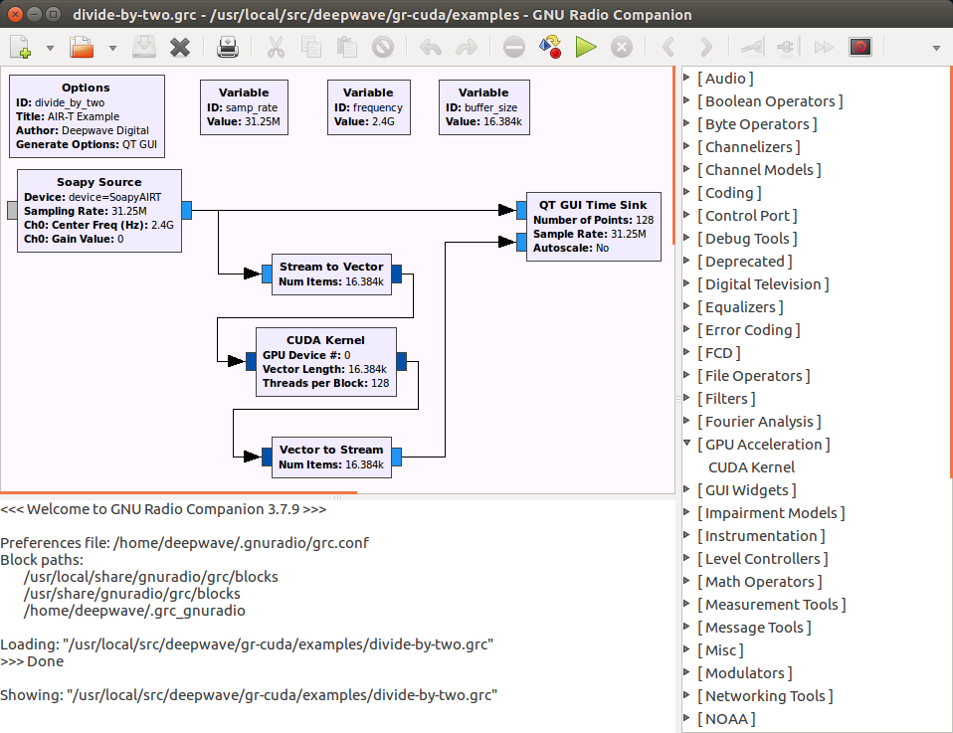

The AIR-T also supports GNU Radio, one of the most widely used open-source toolkits for signal processing and SDR. Included with AirStack, the toolkit provides modules for the instantiation of bidirectional data streams with the AIR-T’s transceiver (transmit and receive) and multiple DSP modules in a single framework. For a graphical interface to design SDR applications by connecting blocks together, GNU Radio Companion is available as shown in the figure above. GNU Radio is written in C++ and has Python bindings.

Like the majority of SDR applications, most functions in GNU Radio rely on CPU processing. Since many DSP engineers are already familiar with GNU Radio, two free and open-source modules, gr-cuda and gr-wavelearner, have been created for AirStack to provide GPU acceleration on the AIR-T from within GNU Radio. These two modules, along with the primary GNU Radio modules for sending and receiving samples to and from the AIR-T, are shown in the table below and included with AirStack.

Table 16

| GNU Radio Module | Description |
|---|---|
| gr-cuda | A detailed tutorial for incorporating CUDA kernels into GNU Radio. URL: https://github.com/deepwavedigital/gr-cuda |
| gr-wavelearner | A framework for running both GPU-based FFTs and neural network inference in GNU Radio. URL: https://github.com/deepwavedigital/gr-wavelearner |
| gr-uhd | The GNU Radio module for supporting UHD devices. URL: https://github.com/gnuradio/gnuradio/tree/master/gr-uhd |
| gr-soapy | Vendor neutral set of source/sink blocks for GNU Radio. URL: https://gitlab.com/librespacefoundation/gr-soapy |

Deep Learning¶

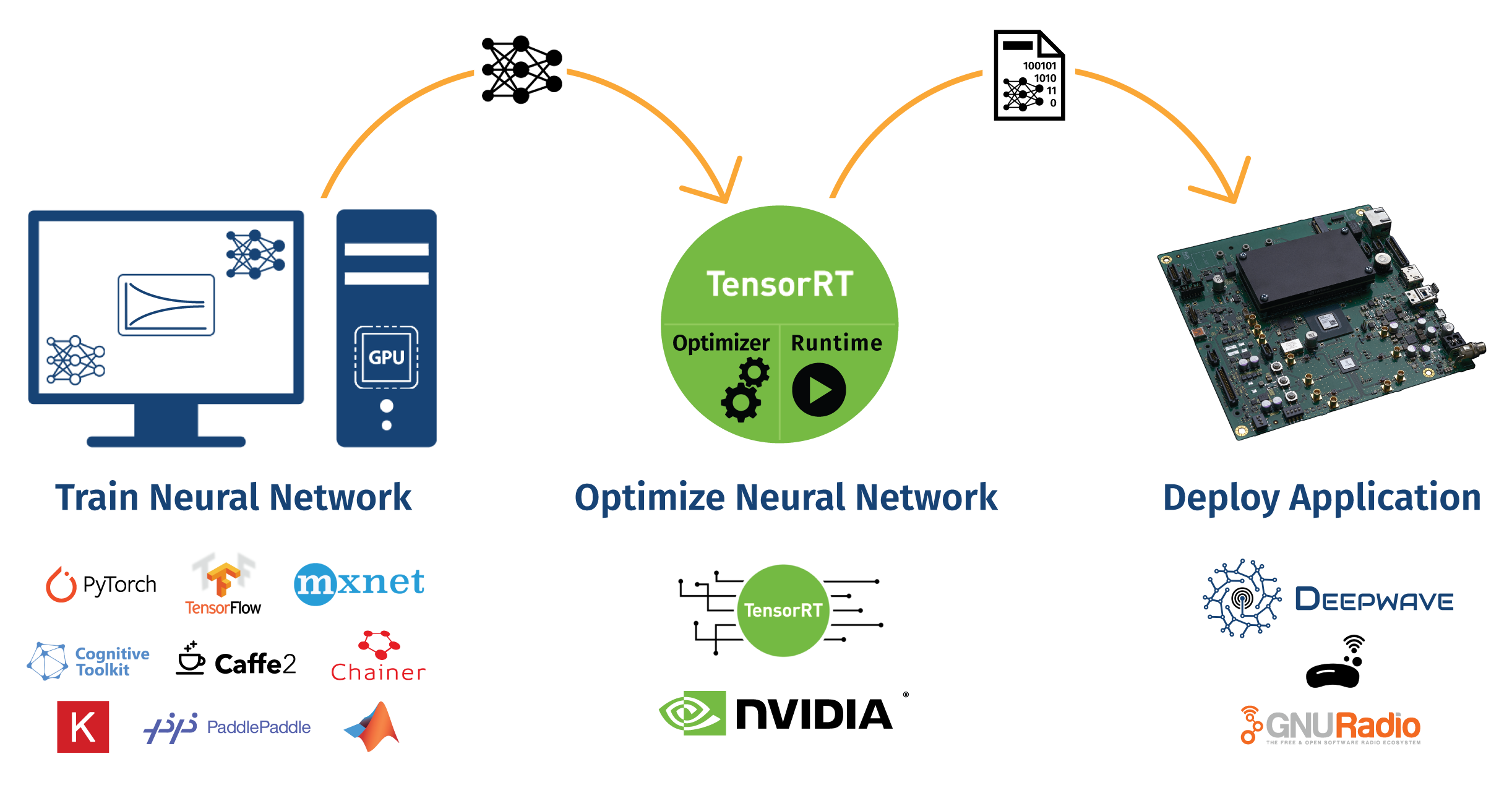

The workflow for creating a deep learning application for the AIR-T consists of three phases: training, optimization, and deployment. These phases are illustrated in the figure above and covered in the following sections.

AirPack is an add-on software package (not included with the AIR-T) that provides source code for the complete training-to-deployment workflow described in this section. More information about AirPack may be found here: https://deepwavedigital.com/software-products/airpack/.

Training Frameworks¶

The primary inference library used on the AIR-T is NVIDIA’s TensorRT. TensorRT allows optimized inference to run on the AIR-T’s GPU. TensorRT is compatible with models trained using a wide variety of frameworks, as shown below.

Table 17

| Deep Learning Framework | Description | TensorRT Support | Programming Languages |
|---|---|---|---|
| TensorFlow | Google’s deep learning framework. URL: www.tensorflow.org/ | UFF, ONNX | Python, C++, Java |
| PyTorch | Open source deep learning framework maintained by Facebook. URL: www.pytorch.org/ | ONNX | Python, C++ |
| MATLAB | MATLAB has a Statistics and Machine Learning Toolbox and a Deep Learning Toolbox. URL: www.mathworks.com/solutions/deep-learning.html | ONNX | MATLAB |
| CNTK | Microsoft’s open source Cognitive Toolkit. URL: docs.microsoft.com/cognitive-toolkit/ | ONNX | Python, C#, C++ |

When training a neural network for execution on the AIR-T, make sure that the layers being used are supported by your version of TensorRT. To determine what version of TensorRT is installed on your AIR-T, open a terminal and run:

$ dpkg -l | grep TensorRT

The supported layers for your version of TensorRT may be found in the TensorRT SDK Documentation under the TensorRT Support Matrix section.
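For scripts that need the same version information, the `dpkg` check can be approximated in Python. This is a minimal sketch rather than an AirStack utility: `parse_dpkg_listing` and `installed_tensorrt_packages` are hypothetical helper names, and unlike the `grep` command above, the match here is case-insensitive.

```python
import subprocess


def parse_dpkg_listing(text, keyword="tensorrt"):
    """Return (package, version) pairs from `dpkg -l` output mentioning keyword."""
    matches = []
    for line in text.splitlines():
        # Columns: status, name, version, architecture, description
        fields = line.split(None, 4)
        if len(fields) < 3:
            continue
        installed = fields[0][1:2] == "i"  # second status letter: installed
        if installed and keyword in line.lower():
            matches.append((fields[1], fields[2]))
    return matches


def installed_tensorrt_packages():
    """Run `dpkg -l` and list installed packages that mention TensorRT."""
    result = subprocess.run(
        ["dpkg", "-l"], capture_output=True, text=True, check=True
    )
    return parse_dpkg_listing(result.stdout)
```

Calling `installed_tensorrt_packages()` on a Debian-based system returns a list that is empty when no matching package is installed. The parsing is kept in a separate function so it can be tested without running `dpkg`.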

Optimization Frameworks¶

Once a model is trained (and saved in one of the file formats listed in the table above), it must be optimized to run efficiently on the AIR-T. The primary function of the AIR-T is to be an edge-compute inference engine for the real-time execution of deep learning applications. This section discusses the supported framework(s) for optimizing a deep neural network (DNN) for deployment on the AIR-T.

TensorRT - The primary method for executing a deep learning algorithm on the AIR-T’s GPU is to use NVIDIA’s TensorRT inference accelerator software. This software will convert a trained neural network into a series of GPU operations, known as an inference engine. The engine can then be saved and used for repeated inference operations.

Deployment on the AIR-T¶

After the neural network has been optimized, the resulting inference engine may be loaded into a user’s inference application using either the C++ or Python TensorRT API. The user’s inference application is responsible for passing signals from the radio to the inference engine and reading back the results of inference. If using GNU Radio, the gr-wavelearner library provides a mechanism for building an inference application within a GNU Radio flowgraph.

Deep Learning Deployment Source Code¶

Deepwave provides a source code toolbox to demonstrate the recommended training-to-inference workflow for deploying a neural network on the AIR-T: AIR-T Deep Learning Inference Examples.

The toolbox demonstrates how to create a simple neural network for TensorFlow and PyTorch on a host computer. Installation of these packages for training is made easy by the inclusion of a .yml file to create an Anaconda environment. For inference execution, all Python packages and dependencies are pre-installed on AIR-Ts running AirStack 0.3.0+.

In the toolbox, the example neural network model accepts an arbitrary-length input buffer and has only one output node, which calculates the average of the instantaneous power across each batch of the input buffer. While this network is not a typical neural network model, it is an excellent example of how customers may deploy their trained models on the AIR-T for inference.
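The quantity this example network outputs can be reproduced in plain numpy, which is useful as a reference when checking inference results. The sketch below is an illustration, not the toolbox code: `average_power` is a hypothetical name, and the input layout (float values with I and Q interleaved along each batch row) is an assumption that may differ from the toolbox.

```python
import numpy as np


def average_power(batch):
    """Mean instantaneous power per batch row.

    batch: array of shape (batch_size, 2 * num_samples) holding
    interleaved I/Q values (assumed layout).
    """
    batch = np.asarray(batch, dtype=np.float32)
    i = batch[:, 0::2]  # in-phase components
    q = batch[:, 1::2]  # quadrature components
    # Instantaneous power of each sample is I**2 + Q**2
    return np.mean(i * i + q * q, axis=1)


# Two batch rows, each with two complex samples
x = np.array([[1.0, 0.0, 0.0, 1.0],
              [0.5, 0.5, 0.5, 0.5]], dtype=np.float32)
p = average_power(x)
```

Comparing a deployed engine's output against a CPU reference like this is one simple way to confirm that the radio-to-inference data path is scaled and ordered as intended.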